I’m happy to report that the readings and lecture this week caused much less of a crisis of confidence than the last two. Terms and expectations were reasonably clear, the workload was manageable, and I was even able to think ahead to start considering future assignments. I do still feel a fair amount of insecurity because so many people in the class seem like they already know what they are doing - several of the class members are already foresight professionals, and a few others are researchers, which was especially relevant for this week.

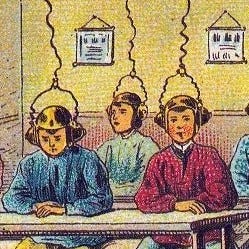

“Research” here is meant pretty broadly. Last week I used the term to refer to the digging around that feeds the current assessment, but the more interesting type of research relates to the study and extraction of the bits of potential futures that we find floating around the present[1]. This research can be qualitative or quantitative, and it can be secondary (reading and digesting content others have created) or primary (creating new knowledge). This week’s focus was on the many forms of primary research in Futures, meaning the ways we generate data and information ourselves.

Because all of these research modalities are fundamentally human endeavors, they are subject to cognitive bias - the predictable blind spots of the human brain. This article gives a good basic list of some cognitive biases that can color research work. I’d like to draw special attention to observational selection bias as a risk for futurists hunting for weak signals - it’s easy to start seeing tiny bits of potential futures everywhere you go and become convinced that a big change is right around the corner[2].
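To make the observational selection bias point concrete, here is a toy model of my own (not from the readings), with made-up numbers: a fixed share of the items you come across actually match some pattern, but once you’re primed to look for it, you notice every match and only some of everything else. The function name and parameters are just illustrative.

```python
def perceived_share(base_rate: float, notice_match: float, notice_other: float) -> float:
    """Expected share of *noticed* items that look like the signal (toy model).

    base_rate    -- true fraction of items that match the pattern
    notice_match -- probability of noticing an item that matches (primed attention)
    notice_other -- probability of noticing an item that doesn't match
    """
    noticed_match = base_rate * notice_match
    noticed_other = (1 - base_rate) * notice_other
    return noticed_match / (noticed_match + noticed_other)


# Unprimed: equal attention, so the perceived share matches the true base rate.
print(round(perceived_share(0.05, 0.2, 0.2), 3))  # 0.05
# Primed: every match gets noticed, but only one in five of everything else does.
print(round(perceived_share(0.05, 1.0, 0.2), 3))  # 0.208
```

Nothing about the world changes between the two calls, only where the attention goes, yet a 5% phenomenon starts to look like one in five.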

The first type of research, and one that might overcome many of these biases, is quantitative analysis, since doing math on a set of numbers is a formal, dispassionate exercise[3]. We read an interesting article from Anne Boysen about how this could be helpful in futures[4]. I studied quantitative subjects in school[5] and have a professional background in data analytics, so I know my way around a database, and in my experience the biggest problem is that the data is almost always about something that already happened. Three subcategories might be fruitful exceptions. Forecasting is an option, but it is really only useful until the system generating the behavior changes[6]. Predictive analytics generally aims to guess the outcome of specific cases, and though it can surface hidden relationships, its utility for foresight is limited. Natural Language Processing (including Large Language Models, aka LLMs) has a lot more promise, because it can extract at scale what people are saying that’s relevant to the future (topics, sentiment, etc.), whether that text comes from some of the techniques below or from secondary analysis of things like news articles and social media posts.

The next kind of primary research is collecting survey data. I have done a bit of survey design and a fair amount of survey administration[7], so this isn’t new to me. The keys are to get the right kind of sample to give you the information you’re looking for (do you want the views of the general public, academics, people in the field, etc.?), and to ask everyone the same questions (usually with a closed set of possible responses) so the results can be aggregated.
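Asking everyone the same closed-ended question is what makes aggregation straightforward. A rough sketch, with a hypothetical question, answer set, and respondents (none of it real data), that tallies answers per sample segment and rejects anything outside the closed set:

```python
from collections import Counter, defaultdict

# Hypothetical closed-ended question, e.g. "In 20 years, will X be more or less
# important to people than it is today?" Every respondent gets the same choices.
CHOICES = ("more important", "about the same", "less important")

# Hypothetical responses: (sample segment, chosen answer).
responses = [
    ("general public", "less important"),
    ("general public", "about the same"),
    ("general public", "less important"),
    ("people in the field", "more important"),
    ("people in the field", "about the same"),
    ("academics", "less important"),
]


def tabulate(responses, choices):
    """Count answers per segment, rejecting anything outside the closed set."""
    table = defaultdict(Counter)
    for segment, answer in responses:
        if answer not in choices:
            raise ValueError(f"answer {answer!r} is not one of the allowed choices")
        table[segment][answer] += 1
    return table


# Print each segment's answer shares; a Counter returns 0 for unchosen answers.
for segment, counts in tabulate(responses, CHOICES).items():
    total = sum(counts.values())
    shares = ", ".join(f"{choice}: {counts[choice] / total:.0%}" for choice in CHOICES)
    print(f"{segment} (n={total}): {shares}")
```

With samples this small the percentages mean very little; the point is that identical questions and a fixed answer set turn the tally into a mechanical step, whereas free-text answers would first have to be sorted into categories by hand.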

Next come interviews. I’ve interviewed lots of people informally, from a short-lived podcast with frequent guests[8] to an interview of my professor from last semester. However, doing interviews for research is much more structured[9]. The research protocol, including the intended questions, serves as the foundation for the interviews and a common point of understanding among the research team[10], and sharing the questions with the subjects ahead of time can improve the thoughtfulness of the responses as well as reduce uncertainty[11]. For futures research specifically, the questions should relate to what forces are driving the topic in question and where it might go; giving the subject space to speculate can push them to the edge of their experience and knowledge and result in richer answers that uncover more leads about weak signals, etc. Interviews can also be used in conjunction with other futures activities. For example, participants in a workshop about the future of an industry or an organization could be interviewed before the workshop begins; this not only provides data that might be a useful input, but also warms up participants for the activities to come.

One more to throw in here: ethnography, or systematically studying the behavior and activity of a particular group, the kind of work that firms like IDEO do for companies. Deeply understanding the way something works in the present can help with imagining ways things might be different in the future (this may be covered much more deeply in the Design Futures class).

There are lots of interesting software tools that the research pros in the class recommended. Many of them were basically interview management platforms with some amount of automatic analysis, and I don’t have the experience to recommend one over another, but there was one I hadn’t heard of (a weak signal, if you will). Synthetic Users uses LLMs to create fake people matching a profile you make up, then asks them your questions (it’s geared toward product design). Based on my own experience with LLMs, this seems like a good way to generate plausible responses and to find areas of ambiguity in a survey or interview where your questions aren’t taken the way you intend. This probably goes without saying, but a few more things would have to happen for this to be a valid source of research data.

For my project on the future of religion in the US, I’m planning to interview some of the people whose names I’ve been seeing crop up in relevant articles, leaders of interfaith groups, and/or hospital chaplains[12].

[1] Unsurprising, given the name of the class…

[2] Nuclear fusion as an energy source is the classic example here.

[3] Fully aware of the bias that creeps into data analysis, as well as “researcher degrees of freedom”, etc., but it’s as close as we can get.

[4] Unfortunately, unlike most other articles in the field, this one doesn’t seem to have a copy or a reasonable facsimile anywhere on the open internet that I can find.

[5] Math, Statistics, and Economics.

[6] I did COVID forecasting in 2020 and 2021, and it was a constant exercise in frustration.

[7] One of my jobs in high school was working as a pollster for Gallup. It was difficult work, and I rarely beat my quota, but now I’m definitely in the upper echelons of my age bracket for willingness to talk to people on the phone.

[8] Kitchen Table Netrunner was a great vehicle for learning audio editing and for breaking into a cool community (it’s surprising how many people will talk to you when you have a microphone).

[9] I assume this is partly due to human research protections and partly due to the desire to compare results and to answer a predefined research question.

[10] Hypothetically - unsurprisingly, for this class I am a team of one.

[11] It can also be helpful to have a “position document” that subjects can read and react to, for example if there are existing visions or plans in the space.

[12] Chaplains in particular seem uniquely connected to the on-the-ground experience of a cross-section of the population.